Is YouTube using genAI to alter uploaded videos without telling you?

TL;DR: Yes, but they don’t want it to be called generative AI. They would prefer you call it “improving video quality.”

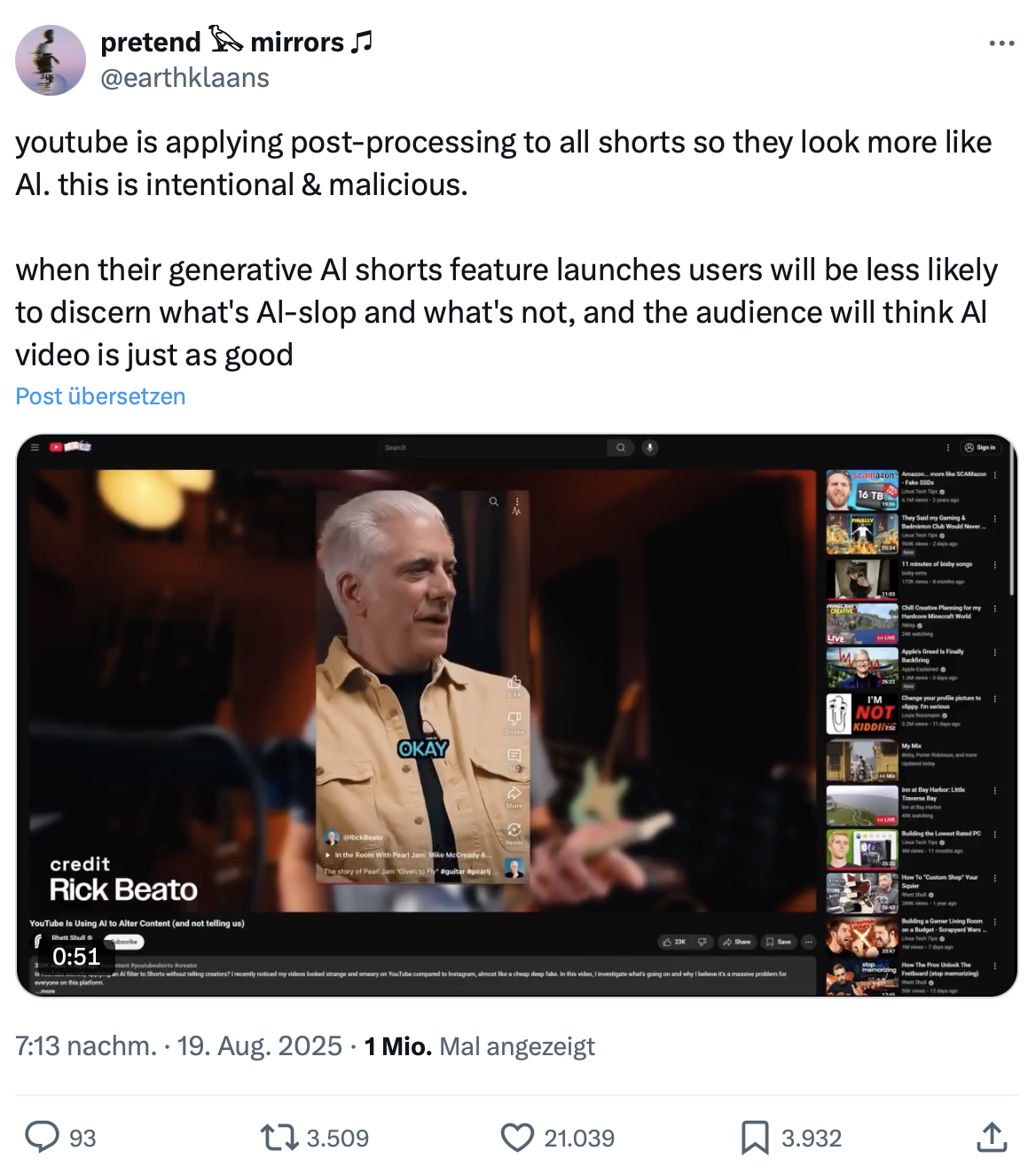

This blew up rather quickly! In his video “YouTube Is Using AI to Alter Content (and not telling us)”, Rhett Shull makes the case that YouTube is somehow modifying uploaded Shorts using AI, resulting in videos that look AI-generated. The story made it onto TechLinked, and a stolen clip from the show, posted on Twitter, prompted a reply: an Orwellian reply that tells you that water is, in fact, not wet.

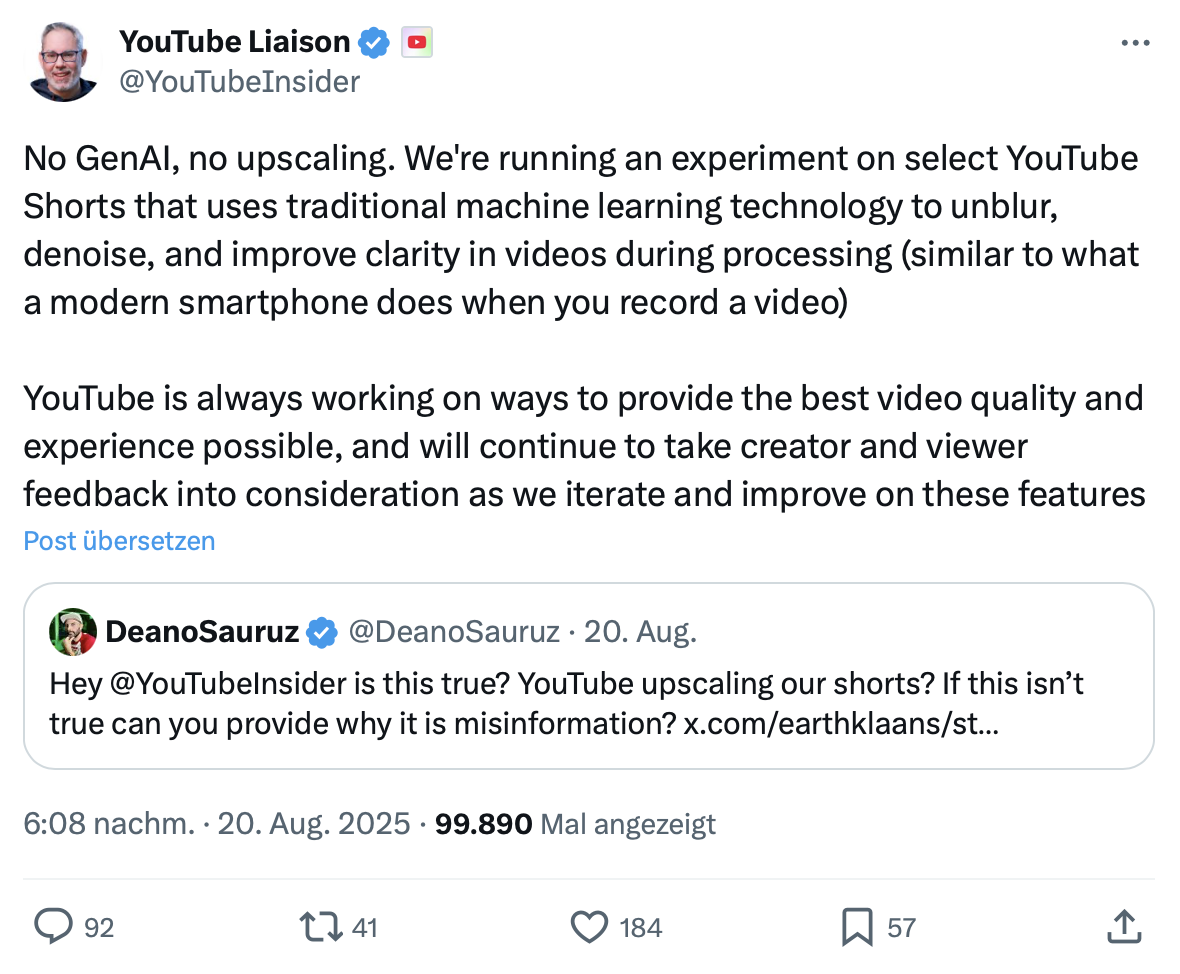

The posts in order, from the original to the reply.

Aside: Please learn to use punctuation, @earthklaans. Avoiding uppercase letters and commas doesn’t make you look cool; it just makes your text harder to read.

Let’s unpack that. The post starts out by saying: “No GenAI”. So, clearly, we’re supposed to be reassured that YouTube is not generating any pixels in those videos. It continues with: “We […] use traditional machine learning […] to unblur, denoise, and improve clarity […]”, and, for good measure, it qualifies “traditional machine learning” by comparing it to what a smartphone does when you use the camera.

Here is the rub, though: “traditional machine learning”, also known as ML, is a form of AI. YouTube uses it to modify videos, generating pixels the camera sensor did not record. They are inventing, or “generating”, data using AI. That is GenAI. They “unblur”, which is to say they add detail in areas where there was none, inventing pixels. They “denoise”, meaning they generate pixels that did not exist in the upload. They “improve clarity”, which usually means increasing the brightness contrast between differently coloured pixels. That last one is the only step that can be considered non-generative, as it doesn’t invent anything the camera sensor did not see.

Above, I described the reply as Orwellian because it tells you that what you are seeing is not what you are seeing. That is, in a way, poetic, considering the reply is about made-up image data that the camera sensor never captured, but that YouTube decided to present as if it had.