Write, don’t generate. Or: The bell curve of taste

Robert Kingett’s “The Colonization of Confidence” stirred an interesting conversation at home this morning, and I want to share a thought from it. Robert writes about a process writers are going through and how LLMs are causing it. I immediately connected his writing to my own struggle in the field of web design. You should read his text, all of it. Here is a taste:

> "I can't write, Rob!" Leo screams. It is a terrifying sound, a man ripping his own throat open. "I feel so stupid! I look at my words and they look like trash! I can't do it! I'm done! I hate everything I do without the AI!"
>
> I have no idea what to say. Leo comes closer, his hands grasping mine. The force of his grip breaks my heart.
>
> "Rob," he says, and his voice shakes with tears. "I hate this. I hate this."

Yes, an LLM text is probably more likely to sell to more people than a human-written text. An LLM generates the statistical average of the taste of an unknown but large group of people. Think of a bell curve describing how many people find a text attractive: at the edges are texts that appeal to very few, and the LLM text sits right in the middle. Many people like the LLM salad with sugary dressing and some corn on the side; fewer like the human-made salad with a hint of acidity from real vinegar, some crunchy vegetables and a slice of freshly baked bread. That doesn’t make the LLM text better; it makes it easier to sell.

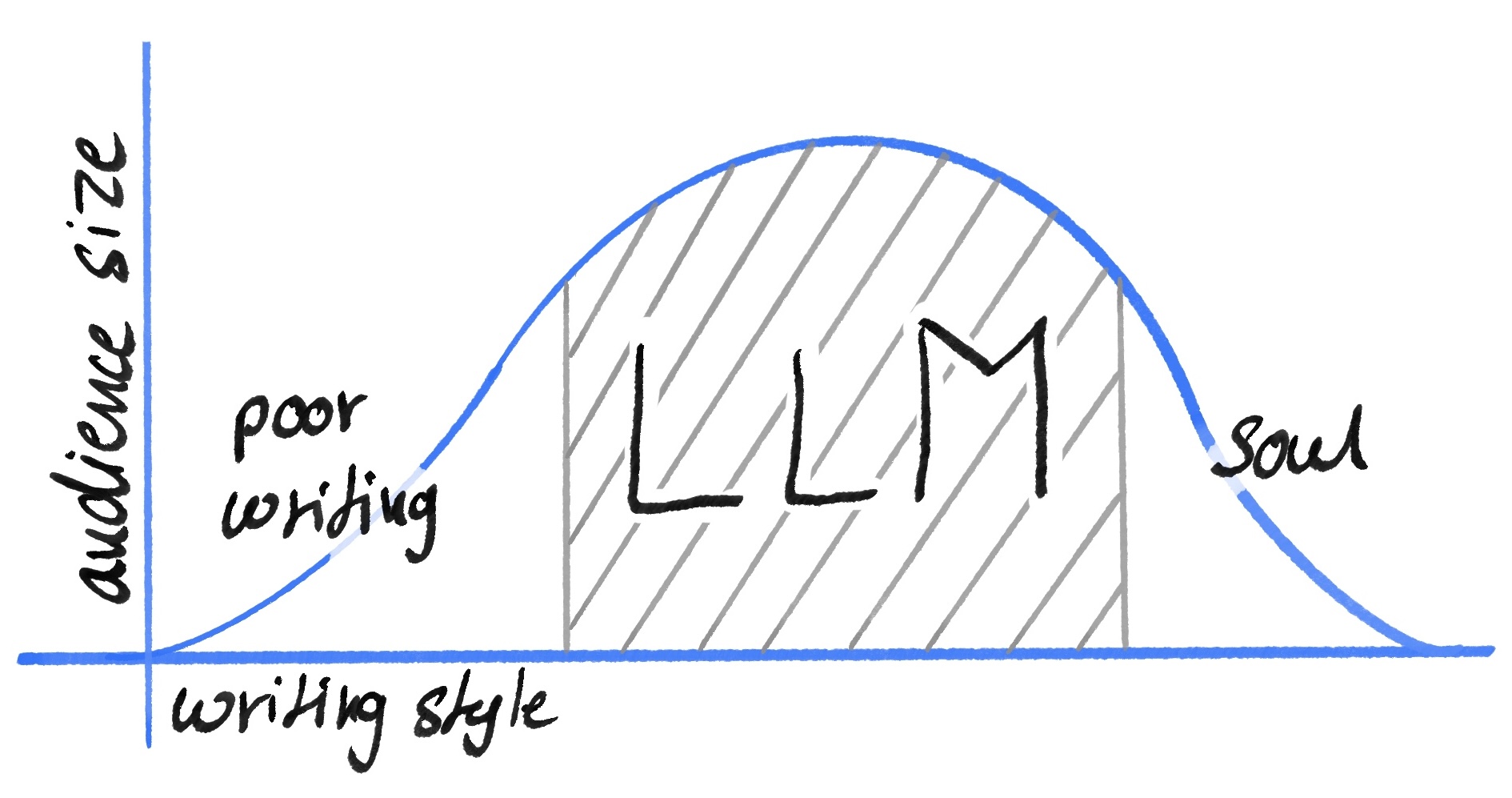

Look at this graph I invented; it shows an imaginary bell curve. The y-axis is the audience size, as in how many people might find your text attractive. The x-axis is the writing style, on a scale from poor writing, through LLM text, to soul. I call the last portion “soul” because I imagine the texts that go here might feel rough to read or difficult to stomach, but deliver soul, emotion, and humanity.

The bell curve I drew shows that most people prefer the statistically average style of an LLM. The center, the LLM portion, has the largest audience: people who prefer the textual equivalent of a sweetened green smoothie over savouring the complex taste of a salad or a vegetable dish. To the left of the LLM portion is one edge of the bell curve; it covers a much smaller area and represents poor writing. Few people enjoy reading poorly written text, but some do. To the right of the LLM portion is the area I labeled “soul.” Again, its audience is smaller than that of LLM text. Remember, none of this is based on data; it’s what I imagine this graph might look like.

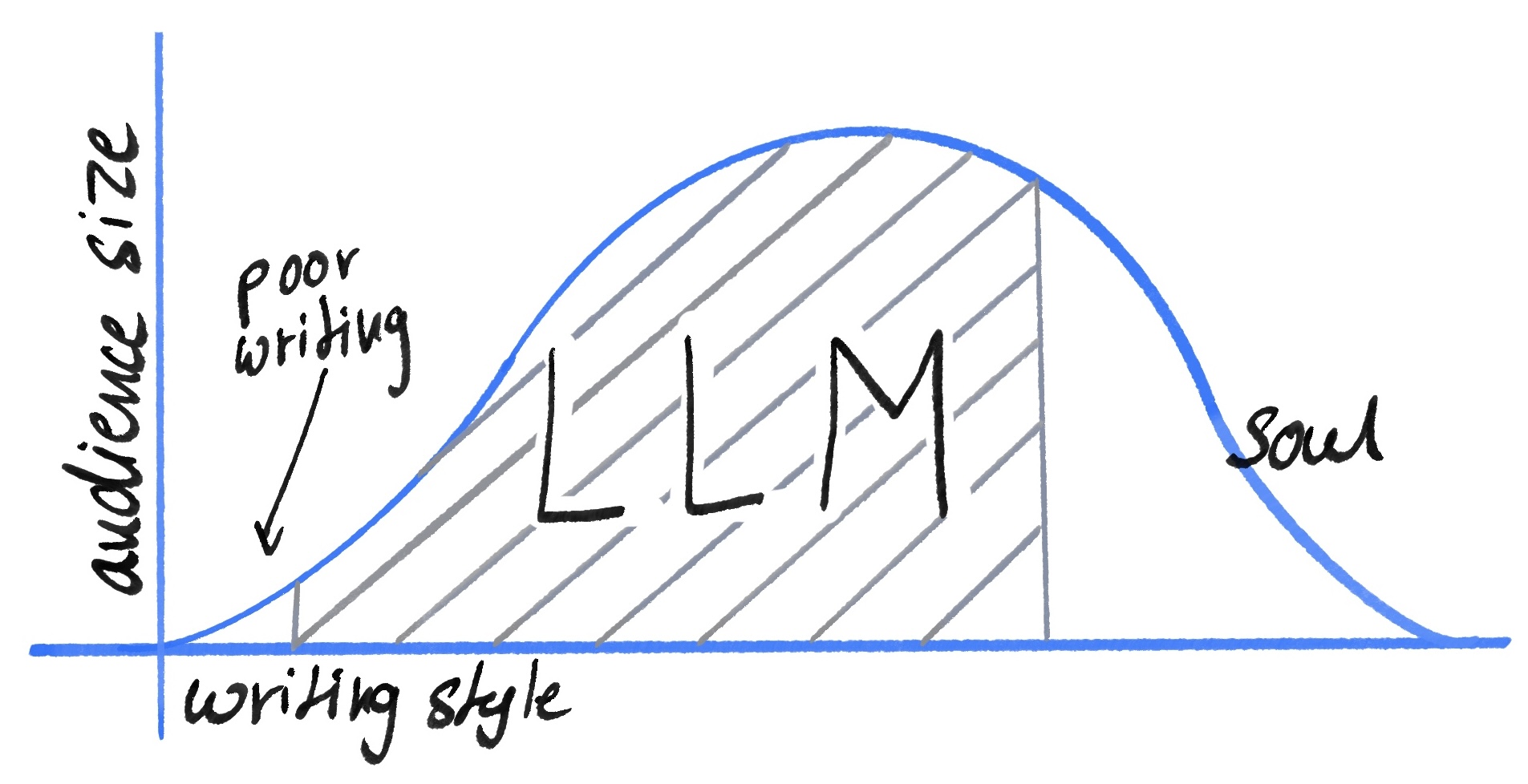

The graph might actually look very different, like so:

This new graph, which I also invented, shows a different distribution: almost nobody likes poor writing, a lot of people like LLM text of poor to acceptable quality, and a significant but small portion of the audience prefers soulful writing. The point is: we don’t know. Or at least I don’t.

In conclusion, I want to get back to the point I made above: it is easier to sell LLM-generated text, and that doesn’t make it better text. The market is much bigger, but so is the competition. AI-generated content is racing towards a value of zero as it gets cheaper and easier to make. Hell, what even are “good” and “bad”? They’re valuations we assign to something based on our personal values and goals. To an editor under pressure to sell more magazines, the text that sells better is better. To an author, the text expressing humanity and emotion, the gritty text, the text with the rough metaphor might be better. They have different values, so their definitions of “good” differ. Neither is right or wrong, and we can disagree with either set of values.

Personally, I wish the author’s values in my example produced better sales, which would eliminate this conflict, and I think that might actually be true. I suspect that if we just allowed ourselves to be human, and stopped editing our humanity away for fear of alienating potential readers and customers, or just rubbing them the wrong way, we’d find that most people prefer the unpolished creations of artists over the smoothed edges of AI-generated content. Let me end on this: if you care about the text you publish, write, don’t generate. Stop tilting at windmills trying to convince others. Instead, research ways to spike your texts to corrupt the AI models illegally trained on them.